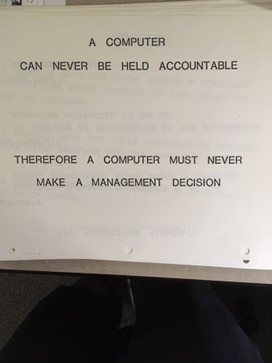

So warned IBM in 1979 in its internal training materials.

Forty-five years later, artificial intelligence, at least in the news, cannot be escaped. From OpenAI’s ChatGPT large language model to Midjourney’s text-to-image generator, the Internet abounds with tools that claim to be able to perform tasks that, until quite recently, required human creativity and spark.

Is this something that we should fear? As the author is acutely aware, the creators of the latest version of ChatGPT claim it can score in the 90th percentile on the Uniform Bar Examination, the test that in many states marks the dividing line between who can and cannot practice law. If you can design a computer program that knows more about the law than do nine out of ten lawyers, what is the point of a lawyer?

The answer is that lawyers do much, much more than know black-letter law. Simply stated, there is no chat prompt in which you can input an entire case into a large language model. Lawyers analyze a case’s entire landscape: a tapestry that consists of facts (ones that already exist, as well as ones that need to be proved), emotions (your client’s, the opposing party’s, your own), risks (ones to take, and ones better left untaken), and probabilities. Of these components, some pose tasks that are amenable to AI’s help. Some do not.

There is some malaise in the legal world that much of our industry is at risk of automation via AI. I disagree. Instead, I truly believe that AI has the potential to increase both the amount of legal work there is to do and the efficacy of that work. After all, AI products offer tools that can streamline tasks. And, when lawyers can streamline aspects of their workflow, they can spend more time concentrating on the high-end work their clients value most.

This is not limited to lawyering. Businesses can and should analyze the components of their work processes that would benefit from AI’s input. For example, from an enterprise level, is there a customer feedback process that requires manual review of written responses? A large language model might be able to help with that. Or, do you have products that you inspect in the course of a quality control process? AI vision-recognition systems might offer a hand to your quality control specialists.

However, perhaps the most important benefit to your business from AI tools is not on the enterprise level, but instead accrues to your employees. AI can help them brainstorm ideas for a presentation, outline the skeleton of a letter or email, or review voluminous records—all almost instantaneously. And, if your employees can speed their work on these tasks, they have the time they saved to devote to other tasks. Simply stated, AI can be a multiplier of profitability.

That said, your business is probably subject to constraints that prevent wholesale automation of tasks and that limit the types of tasks for which AI tools can be used. For example, lawyers are subject to ethical codes that limit the extent to which they can divulge information conveyed to them in confidence. They cannot give that information to AI tools, owned by private entities, any more than they could take a client’s secret and tell it to someone else. When analyzing which business processes might benefit from AI augmentation, you need to identify the legal or ethical structures that might prevent you from using AI for them.

Moreover, with creative AI models, no one, including their creators, can perfectly predict their output. The history of business is littered with anachronistic and perhaps apocryphal stories of technological adoption, such as people inspecting the pages output by a mimeograph to make sure the machine created a faithful copy. We laugh at those stories now, but that impulse to verify is understandable. Those machines were, at one time, new and cutting edge, and users were not sure what they would produce.

AI developers built their large language models by teaching a computer to place words in a multi-dimensional matrix and assign those words varying values based on their interrelatedness. From there, LLMs can interpret inputs and create responses. However, this description does not give users the ability to fully predict an LLM’s output or have faith that its output is correct or true. LLMs can, in fact, “hallucinate,” sometimes creating plausible-sounding confabulations that have no basis in reality. Text-to-image generators are sometimes susceptible to durable artifacts in their outputs: images and themes that the user does not want but that are nonetheless present for reasons that cannot be explained, but can likely be attributed to the program’s training or prompts given by other users.
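For readers who want a concrete picture of "placing words in a multi-dimensional matrix," here is a minimal sketch in Python. It is only an illustration under loud assumptions: the three-number vectors are invented by hand for this example, the names `TOY_VECTORS`, `cosine_similarity`, and `most_related` are hypothetical, and real LLMs learn vectors with hundreds or thousands of dimensions from training data rather than having them typed in. The idea it shows is simply that related words end up pointing in similar directions, which a program can measure.

```python
import math

# Hypothetical, hand-made 3-dimensional word vectors (assumption: invented
# purely for illustration; real models learn far larger vectors from data).
TOY_VECTORS = {
    "lawyer": [0.9, 0.8, 0.1],
    "attorney": [0.85, 0.75, 0.15],
    "banana": [0.1, 0.05, 0.9],
}


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors.

    Values near 1.0 mean the vectors point the same way (related words);
    values near 0.0 mean they are largely unrelated.
    """
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def most_related(word, vectors=TOY_VECTORS):
    """Return the other word whose vector is closest in direction to `word`."""
    others = (w for w in vectors if w != word)
    return max(others, key=lambda w: cosine_similarity(vectors[word], vectors[w]))


if __name__ == "__main__":
    print(most_related("lawyer"))
```

With these made-up numbers, "lawyer" sits much closer to "attorney" than to "banana." Nothing in this arithmetic checks whether a statement is true, which is one way to see why a fluent answer still needs human verification.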

All of this circles back to the 1979 IBM slide. Our businesses are made up of and run by people. While AI tools can be profit-multipliers, computers are not managers. So, like those early mimeograph operators, we can use AI tools for our benefit, but we must verify, at least for now, what they are doing.
